PHP script to move files from shared hosting to Amazon S3 buckets

In this article I am going to explain how to copy or move all the files from your shared hosting server to Amazon S3. There are many tutorials on the net about getting S3 access and how to use it. Try those first, or else it will be explained later.

Amazon S3 is a great service for storing and retrieving large amounts of data at low cost and with good security. On shared hosting, most of us have 2 GB or 4 GB of storage on a server that is shared by many other people, so it is not only for you. This does not apply if you have a dedicated server all to yourself, but you can still try out the Amazon S3 service.

The advantage of doing this is really great. Here it is.

For example:

Say you have 20,000 images or documents that people are always accessing. If you are on a shared hosting server, your site will surely be very slow for everyone who visits. If all the files come from another server, your server only needs to present the design, and visitors get a much better experience. That other server is going to be Amazon S3. Of course there are many other services for this, but Amazon S3 is a good one. So let's try it.
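To make the idea concrete, here is a minimal sketch of a helper that builds the public address of a file once it lives on S3, so that your pages can link to it there instead of to the shared host. The function name `s3Url` and the bucket name in the usage note are hypothetical, and the sketch assumes the files were uploaded as publicly readable.

```php
<?php
/**
 * Build a public URL for an object stored in an S3 bucket.
 * Assumes the object is publicly readable; s3Url is a hypothetical helper.
 */
function s3Url(string $bucket, string $key): string
{
    // Encode each path segment separately so that "/" stays a separator.
    $segments = explode('/', ltrim($key, '/'));
    $encoded  = implode('/', array_map('rawurlencode', $segments));

    return "https://{$bucket}.s3.amazonaws.com/{$encoded}";
}
```

In a template you might then write something like `<img src="<?php echo s3Url('my-bucket', 'inventory/images/photo.jpg'); ?>">`, so the browser fetches the image from S3 and your own server only delivers the page.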

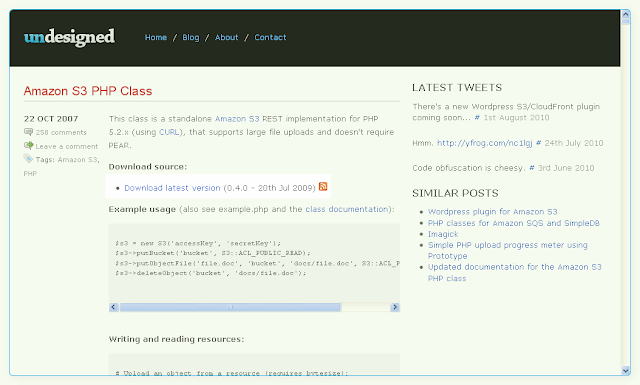

This whole tutorial is based on the S3 PHP class from http://undesigned.org.za/2007/10/22/amazon-s3-php-class, which makes it very easy to connect to the S3 service and to access, edit, delete, and upload files to and from S3. Download the class and follow the instructions. I have also uploaded a copy of my work, which should help you get it done. Try that as well.

So let's extend it to upload all your files from the shared hosting server to Amazon S3.

In my case I have more than 20,000 images in a folder called "[ROOT]inventory/images/", and this number keeps increasing daily, so I planned to move all the files to Amazon S3. But there is a problem: we can't copy all the files in one go because there are more than 20,000 of them, so the upload has to run part by part, either by cron job or by manual execution. Do as you like, and use my script if it is useful to you.

I stored all the file names in a database table by listing the files in that folder. Create a table with two fields: filename, and flagid (which marks task completion; keep its default at 0).
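A listing step along these lines could look like the sketch below. It is not the original imagelist.php: the table name `s3_files`, the function names, and the use of PDO are all assumptions made for illustration. It only adds names that are not in the table yet, so it can be run again as new images arrive.

```php
<?php
// Hypothetical sketch of a listing step; "s3_files" and both function
// names are assumptions, not taken from the original script.

/** Return the names of the regular files directly inside $dir. */
function listFiles(string $dir): array
{
    $entries = scandir($dir);
    if ($entries === false) {
        throw new RuntimeException("Cannot read directory: $dir");
    }
    $names = [];
    foreach ($entries as $entry) {
        // Skips ".", ".." and any subfolders.
        if (is_file($dir . DIRECTORY_SEPARATOR . $entry)) {
            $names[] = $entry;
        }
    }
    return $names;
}

/**
 * Create the tracking table if needed and add every name not stored yet.
 * flagid defaults to 0, meaning "not uploaded yet".
 * Returns the number of newly inserted rows.
 */
function storeFileNames(PDO $db, array $names): int
{
    $db->exec(
        'CREATE TABLE IF NOT EXISTS s3_files (
            filename VARCHAR(255) NOT NULL,
            flagid   INTEGER NOT NULL DEFAULT 0
        )'
    );
    $exists = $db->prepare('SELECT COUNT(*) FROM s3_files WHERE filename = ?');
    $insert = $db->prepare('INSERT INTO s3_files (filename) VALUES (?)');

    $added = 0;
    foreach ($names as $name) {
        $exists->execute([$name]);
        $known = (int) $exists->fetchColumn() > 0;
        $exists->closeCursor();
        if (!$known) {
            $insert->execute([$name]);
            $added++;
        }
    }
    return $added;
}
```

With a PDO connection in `$db`, a call such as `storeFileNames($db, listFiles('inventory/images'))` would fill the table. Only the file name is stored, so the upload step has to know the folder.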

First run imagelist.php to store all the file names in the table, then run imageupload.php. It uploads only 100 images to S3 on each run, by using a LIMIT clause in the query. Once a file has been uploaded, update its flag field to 1 so that it won't be uploaded again next time, or you can even delete that entry instead. It's up to you how to handle it.
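The batch step can be sketched as follows. Again this is not the original imageupload.php: the table name `s3_files`, the function `uploadBatch`, and the bucket name in the closing comment are assumptions. The actual upload is passed in as a callable so the batching logic can be tried without touching S3.

```php
<?php
// Hypothetical sketch of a batch upload step; "s3_files", uploadBatch and
// the $upload callable are assumptions made for illustration.

/**
 * Upload up to $limit pending files and mark each success with flagid = 1.
 * $upload receives (local path, file name) and must return true on success.
 * Returns the number of files uploaded in this run.
 */
function uploadBatch(PDO $db, string $dir, callable $upload, int $limit = 100): int
{
    // $limit is an int, so it is safe to place directly in the query.
    $rows = $db->query(
        'SELECT filename FROM s3_files WHERE flagid = 0 LIMIT ' . $limit
    );
    $pending = $rows->fetchAll(PDO::FETCH_COLUMN);

    $markDone = $db->prepare('UPDATE s3_files SET flagid = 1 WHERE filename = ?');
    $done = 0;
    foreach ($pending as $name) {
        $path = rtrim($dir, '/') . '/' . $name;
        // Flag the row only when the file exists and the upload succeeded.
        if (is_file($path) && $upload($path, $name)) {
            $markDone->execute([$name]);
            $done++;
        }
    }
    return $done;
}

// Possible wiring with the S3 class (bucket name is a placeholder):
//
//   require 'S3.php';
//   S3::setAuth($accessKey, $secretKey);
//   $upload = function ($path, $name) {
//       return S3::putObject(S3::inputFile($path), 'my-bucket',
//           'inventory/images/' . $name, S3::ACL_PUBLIC_READ);
//   };
//   echo uploadBatch($db, 'inventory/images', $upload), " files uploaded\n";
```

Each run picks up where the last one stopped, so the same script works from a cron job or by hand. Files that fail keep flagid 0 and are tried again on the next run, so it is worth checking for names that never succeed.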

More on this class: the documentation is at http://undesigned.org.za/2007/10/22/amazon-s3-php-class/documentation

You can get a copy of the source here.

Change the DB settings and the table fields to suit your needs, then run it on your server. This tutorial is based on a Nettuts tutorial; thanks to Nettuts.

This is a somewhat risky task, so be careful when working on it. Please comment to help improve it, and share this post.

Download
